Since 2017 EcoCooling has been involved in an EU Horizon 2020 funded ground-breaking pan-European research project to build and manage the most efficient data centre in the world! With partners H1 Systems (project management), Fraunhofer IOSB (compute load simulation), RISE (Swedish Institute of Computer Science) and Boden Business Agency (Regional Development Agency) a 500kW data centre has been constructed using the very latest energy efficient technologies and employing a highly innovative holistic control system. In this article we will provide an update on the exciting results being achieved by the Boden Type Data Centre 1 (BTDC-1) and what we can expect from the project in the future.

The project objective: To build and research the world’s most energy and cost-efficient data centre.

The BTDC is in Sweden, where there is an abundant supply of clean, renewable hydro-electricity and a cold climate ideal for free cooling. The facility is made up of three separate research modules (pods) housing Open Compute/conventional IT, HPC and ASIC (Application Specific Integrated Circuit) equipment, and the EU’s target was to design a data centre with a PUE of less than 1.1 across all of these technologies. With only half of the project complete, the facility has already demonstrated PUEs of below 1.02, which we believe is an incredible achievement.

The highly innovative modular building and cooling system was devised to be suitable for all sizes of data centre. By using these construction, cooling and operation techniques, smaller scale operators will be able to match or better the cost and energy efficiencies of hyperscale data centres.

We all recognise that PUE has limitations as a metric. However, in this article and for dissemination we will continue to use PUE as a comparative measure, as it is still widely understood.

Exciting First Results – Utilising the most efficient cooling system possible

At BTDC-1, one of the main economic features is the use of EcoCooling’s direct ventilation systems with optional adiabatic (evaporative) cooling, which produce the cooling effect without requiring an expensive conventional refrigeration plant.

This brings two facets to the solution at BTDC-1: cooling and humidification. On very hot days, or on very cold, dry days, the ‘single box approach’ of EcoCoolers can switch to adiabatic mode and provide as much cooling or humidification as necessary to maintain the IT equipment environmental conditions within the ASHRAE ‘ideal’ envelope, 100% of the time.

With the cooling and humidification approach I’ve just outlined, we were able to produce very exciting results.

Instead of the commercial data centre norm of PUE 1.8, or 80% extra energy used for cooling, we have been achieving a PUE of less than 1.05. That is lower than the published values of some data centre operators using ‘single-purpose’ servers – but we’ve done it with General Purpose OCP servers. We’ve also achieved the same PUE using high density ASIC servers.

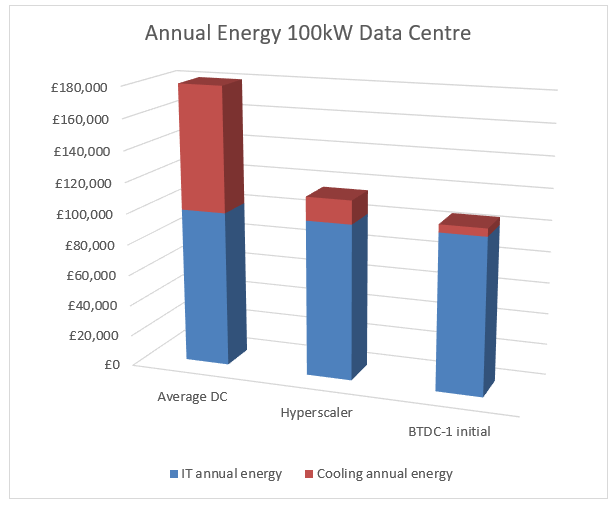

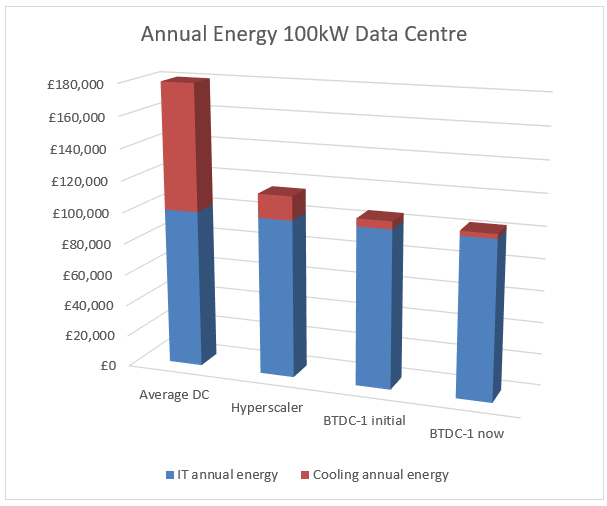

This is an amazing development in reducing the cost and carbon footprint of data centres. Let’s quickly look at the economics of that applied to a typical 100kW medium size commercial data centre. Moving from a PUE of 1.8 to 1.05 drops the cooling energy cost from £80,000 to a mere £5,000 a year. That’s a £75,000 per year saving.

Pretty amazing cost (and carbon) savings I’m sure you’d agree.
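The arithmetic behind these figures can be reproduced with a short sketch. It assumes that all energy above the IT load is cooling overhead, that the 100kW load runs all year, and that electricity costs £1,000 per kW-year (roughly 11.4p per kWh). That tariff is not stated in the article; it is simply the price implied by the £80,000 and £5,000 figures. The function and variable names are illustrative only.

```python
HOURS_PER_YEAR = 8760


def annual_overhead_cost(it_load_kw, pue, price_per_kwh):
    """Annual cost (GBP) of the non-IT energy implied by a given PUE."""
    # PUE = total facility energy / IT energy, so the overhead is (PUE - 1) x IT load
    overhead_kw = it_load_kw * (pue - 1.0)
    return overhead_kw * HOURS_PER_YEAR * price_per_kwh


# Assumed tariff: GBP 1,000 per kW-year, implied by the article's figures
PRICE_PER_KWH = 1000 / HOURS_PER_YEAR

conventional = annual_overhead_cost(100, 1.8, PRICE_PER_KWH)
direct_air = annual_overhead_cost(100, 1.05, PRICE_PER_KWH)

print(f"PUE 1.80: about £{conventional:,.0f} per year")
print(f"PUE 1.05: about £{direct_air:,.0f} per year")
print(f"Saving:   about £{conventional - direct_air:,.0f} per year")
```

With a different tariff the absolute figures change, but the ratio between the two cooling bills (16 to 1) stays the same.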

Smashing 1.05 PUE – Direct linking of server temperature to fan speed

What we did next has had truly phenomenal results using simple process controls. What has been achieved here can be simply replicated in conventional servers, but this ultra-efficient operation can only be achieved if the mainstream server manufacturers embrace these principles. I believe this presents a real ‘wake-up’ call to conventional server manufacturers – if they are ever to get serious about total cost of ownership and global data centre energy usage.

You may know that within every server, there are multiple temperature sensors which feed into algorithms to control the internal fans. Mainstream servers don’t yet make this temperature information available outside the server.

However, one of the three ‘pods’ within BTDC-1 is kitted out with about 140kW of Open-Compute servers. One of the strengths of the partners in this project is that average server measurements have been made accessible to the cooling system. At EcoCooling, we have taken all of that temperature information into the cooling system’s process controllers (without needing any extra hardware). Normally, the processing and cooling systems are separate, with inefficient time-lags and wasted energy. We have made them close-coupled and able to react to load changes in milliseconds rather than minutes.

As a result, we now have BTDC-1 “Pod 1” operating with a PUE of not 1.8, not 1.05, but 1.03!
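To illustrate the idea of linking server temperature directly to fan speed, here is a minimal sketch of a proportional controller. It is not the control algorithm used at BTDC-1, which the article does not describe; the setpoint, gain and speed limits are hypothetical values chosen only to show the principle.

```python
def fan_speed_percent(server_temp_c, setpoint_c=25.0, gain=8.0,
                      min_speed=20.0, max_speed=100.0):
    """Map a measured server temperature to a cooling fan speed (percent).

    All default values are hypothetical and chosen for illustration.
    """
    # Only temperatures above the setpoint ask for extra airflow
    error = max(server_temp_c - setpoint_c, 0.0)
    # Proportional response, clamped to the fan's maximum speed
    return min(max_speed, min_speed + gain * error)


# Example: fan demand follows the server temperature as the load rises
for temp in (22.0, 25.0, 30.0, 40.0):
    print(f"{temp:.0f}°C -> fan at {fan_speed_percent(temp):.0f}%")
```

Because the controller reads the server temperature itself rather than the room air temperature, fan energy rises only when the servers actually need more airflow.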

The BTDC-1 project has demonstrated a robust repeatable strategy for reducing the energy cost of cooling a 100kW data centre from £80,000 to a tiny £3,000.

This represents a saving of £77,000 a year for a typical 100kW data centre. Now consider the cost and environmental implications of this for the hundreds of new data centres anticipated to be rolled out to support 5G and “edge” deployment.

Planning for the future – Automatically adjusting to changing loads

An integrated and dynamic approach to DC management is going to be essential as data centre energy-use patterns change.

What do I mean? Well, most current-generation data centres (and indeed the servers within them) present a fairly constant energy load. That is because the typical server’s energy use only reduces from 100% when it is flat-out to 75% when it’s doing nothing.

At BTDC-1, we are also designing for two upcoming changes which are going to massively alter the way data centres need to operate.

Firstly, the next generations of servers will use far less energy when not busy. So instead of 75% quiescent energy, we expect to see this fall to 25%. This means the cooling system must continue to deliver 1.003 pPUE at very low loads. (It does.)
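A rough sketch shows why this matters for the cooling system. It assumes, purely for illustration, that server power rises linearly between its idle level and full load; real servers will not follow a perfectly straight line, and the function name is hypothetical.

```python
def server_power_fraction(utilisation, idle_fraction):
    """Fraction of peak power drawn at a given utilisation (0.0 to 1.0).

    Assumes a linear rise from idle_fraction at no load to 1.0 at full load.
    """
    return idle_fraction + (1.0 - idle_fraction) * utilisation


for label, idle in (("current generation", 0.75), ("next generation", 0.25)):
    low = server_power_fraction(0.0, idle)
    high = server_power_fraction(1.0, idle)
    print(f"{label}: {low:.0%} to {high:.0%} of peak power, "
          f"a swing of {high - low:.0%}")
```

Under this assumption the heat load that the cooling system has to follow swings by 75% of peak instead of 25%, so it has to stay efficient across a much wider operating range.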

Also, BTDC-1, Pod 1 isn’t just sitting idly drawing power – our colleagues from the project are using it to emulate a complete SMART CITY (including the massive processing load of driverless cars). The processing load varies wildly – with massive loads during the commuter traffic ‘rush hours’ in the weekday mornings and the afternoons. And then (comparatively) almost no activity in the middle of the night. So, we can expect many DCs (and particularly the new breed of ‘dark’ Edge DCs) to have wildly varying power and cooling load requirements.

Call to Intel, Dec, Dell, HP, Nvidia et al

At BTDC-1, we have three research pods. Pod 2 is empty – waiting for one or more of the mainstream server manufacturers to step up to the “global data centre efficiency” plate and get involved.

As a sneak peek of what’s to come in future project news, Pod 3 (ASIC) is now achieving a PUE of 1.004 using the same principles outlined in this article. We are absolutely certain that if server manufacturers work with the partners at the BTDC-1 research project, we can help them (and the entire data centre world) to slash average cooling PUEs from 1.5 to 1.004.

The opportunity for EcoCooling to work with RISE (Swedish Institute of Computer Science) and the German research institute Fraunhofer has allowed us to provide independent analysis and validation of what can be achieved using direct fresh air cooling.

The initial results are incredibly promising and, considering we are only halfway through the project, we are excited to see what additional efficiencies can be achieved.

Footnotes

* According to the EU Code of Conduct, the average PUE is 1.8; see https://www.mdpi.com/1996-1073/10/10/1470/pdf, page 7 of 18.

*** Boden Type Data Centre One is a pan-European consortium consisting of data centre engineering specialist H1 Systems, cooling provider EcoCooling, research institute Fraunhofer IOSB, research institute RISE SICS North and infrastructure developers Boden Business Agency, all of whom have joined forces aiming to design and validate a future-proof concept.

For more information, please contact sales@ecocooling.co.uk. For a live PUE reading and more information on the project, go to the Boden Type website: https://bodentypedc.eu

The consortium will also be sharing the latest results in a number of conferences in the next few months, including DataCentre World Frankfurt and DataCentre Forum.